You Can Run ChatGPT Locally Now: OpenAI Just Released gpt-oss-120B and gpt-oss-20B

OpenAI quietly released gpt-oss-120B and gpt-oss-20B. Here’s what they are, what they’re good for, and whether you should care.

OpenAI recently released two models: gpt-oss-120B and gpt-oss-20B.

These are open-weight models released under the Apache 2.0 license, which means you can download them, inspect and modify them, run them locally, and even use them commercially. The license's conditions are light, mainly keeping the copyright and license notices when you redistribute.

They support chain-of-thought reasoning and tool use: they can call functions, browse the web, and execute code, as long as your setup provides those tools.
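What does "calling functions" mean in practice? The model does not run your code itself. It replies with a structured request naming a tool and its arguments, and your program executes it and feeds the result back. Below is a minimal sketch of the application side, assuming an OpenAI-style JSON tool call. The `get_weather` tool, its schema, and the hard-coded model reply are hypothetical, standing in for a real gpt-oss response.

```python
import json

# Hypothetical tool the application exposes to the model.
def get_weather(city: str) -> str:
    # A real tool would query a weather service; this stub returns fixed text.
    return f"Sunny in {city}"

# Tool description in the JSON-schema style used by OpenAI-compatible APIs.
# It would be sent to the model alongside the prompt so it knows the tool exists.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

# Map tool names to the Python functions that implement them.
REGISTRY = {"get_weather": get_weather}

def run_tool_call(tool_call: dict) -> str:
    """Execute one tool call of the form {"name": ..., "arguments": "<JSON string>"}."""
    func = REGISTRY[tool_call["name"]]
    args = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    return func(**args)

# Pretend the model answered "What's the weather in Berlin?" with this call.
model_tool_call = {"name": "get_weather", "arguments": '{"city": "Berlin"}'}
print(run_tool_call(model_tool_call))  # Sunny in Berlin
```

The result string would then go back to the model as a tool message so it can write its final answer.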

At the time of writing, just one day after launch, the larger gpt-oss-120B had been downloaded over 30,000 times and the smaller gpt-oss-20B over 90,000 times.

According to OpenAI, these models were post-trained in a similar way to o4-mini, and the larger one even outperforms it on some benchmarks.

So… can we all now run ChatGPT locally?

Technically, yes.

But there’s a barrier to entry.

You need a capable GPU with plenty of memory. If you’re not a gamer, don’t have a solid homelab, and aren’t using a recent high-end laptop, this might be a problem.

There are two models:

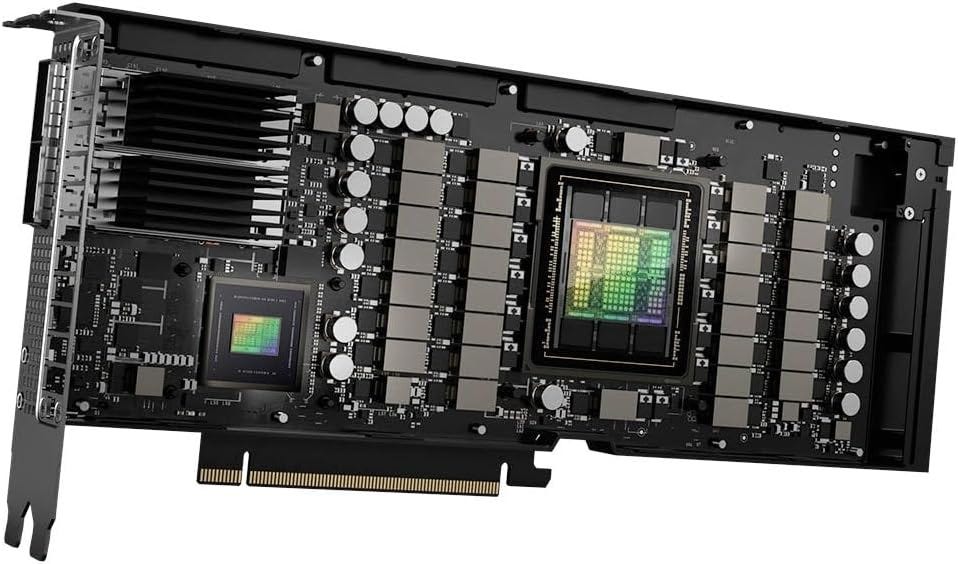

gpt-oss-120B has about 117 billion parameters in total (it is a mixture-of-experts model, so only about 5.1 billion are active for each token) and “can fit into a single H100 GPU” (according to OpenAI).

The H100 GPU is a high-end card with 80 GB of HBM3 (High Bandwidth Memory). It’s one of the most widely used GPUs in AI data centers.

Looks cool, right?

Well, one important thing about this “cool” GPU: it costs over €40,000.

Not something most of us have at home.

However, you can rent one from services like Vast.ai, with an hourly rate of around $2.

That’s affordable for playing around, but gets expensive if you're using it heavily every day.
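To see how quickly "affordable" turns into "expensive", here is a small back-of-the-envelope calculator. It assumes a flat $2 per hour and a 30-day month; real marketplace prices fluctuate, and storage or bandwidth may be billed on top.

```python
def monthly_rental_cost(hourly_rate: float, hours_per_day: float, days: int = 30) -> float:
    """Estimate the cost of renting a GPU for a month at a flat hourly rate."""
    return hourly_rate * hours_per_day * days

# Around $2/hour, the H100 rate quoted above (assumed flat).
for hours in (1, 8, 24):
    print(f"{hours:>2} h/day -> ${monthly_rental_cost(2.0, hours):,.0f}/month")
# Prints:
#  1 h/day -> $60/month
#  8 h/day -> $480/month
# 24 h/day -> $1,440/month
```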

So, with gpt-oss-120B being out of reach for most personal setups, that leaves us with gpt-oss-20B.

And that’s better news.

gpt-oss-20B needs only a GPU with 16 GB of memory. That still calls for a high-end laptop or desktop, but it’s much more realistic for personal use.
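Why do 80 GB and 16 GB work out? A rough rule is: weight memory ≈ parameters × bits per parameter ÷ 8. The sketch below assumes about 117B and 21B total parameters and roughly 4.25 bits per parameter, which corresponds to the MXFP4 format OpenAI reports using for the mixture-of-experts weights (4-bit values plus a shared 8-bit scale per block of 32). Treat it as a back-of-the-envelope estimate: it ignores the layers kept at higher precision, the KV cache, and runtime overhead.

```python
def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Approximate memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9

# Assumed total parameter counts (approximate).
models = {"gpt-oss-120B": 117e9, "gpt-oss-20B": 21e9}

for name, params in models.items():
    bf16 = weight_memory_gb(params, 16)     # 16-bit weights, no quantization
    mxfp4 = weight_memory_gb(params, 4.25)  # ~4-bit values + shared block scales
    print(f"{name}: ~{bf16:.0f} GB at 16-bit, ~{mxfp4:.0f} GB at ~4.25 bits")
# Prints:
# gpt-oss-120B: ~234 GB at 16-bit, ~62 GB at ~4.25 bits
# gpt-oss-20B: ~42 GB at 16-bit, ~11 GB at ~4.25 bits
```

At 16-bit precision neither model would fit its target hardware. At roughly 4 bits, ~62 GB fits in an 80 GB H100 and ~11 GB fits in 16 GB, leaving some headroom for the KV cache and activations.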

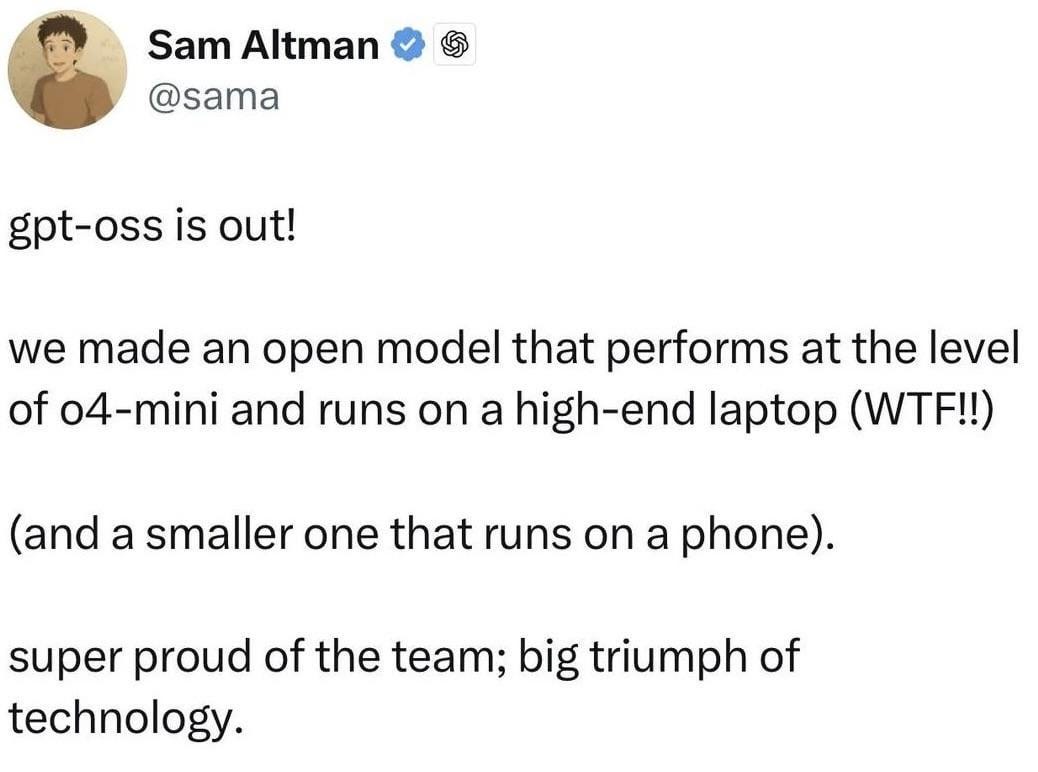

Or, if you're Sam Altman, apparently you can run it on your phone.

Why use gpt-oss at all?

Privacy.

The recent chaos around shared ChatGPT conversations showing up in Google search results shows how easily user privacy can be put at risk.

Sometimes users mess up, and sometimes companies do, whether through their own mistakes or through vulnerabilities that get exploited.

Running these models on your own infrastructure gives you more control.

Sure, you can still mess things up yourself. But at least you’re the one in charge.

Since the model doesn't run on someone else's cloud servers, there are fewer attack vectors.

No “government is reading my chats” paranoia (well, maybe still, depending on how deep that goes).

If you’ve got the GPU, you don’t need to pay for API access. Just load the model and use it. The only cost left is electricity, which can add up if you’re using it heavily.
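How much does "electricity can add up" actually mean? The formula is simple: energy in kWh = watts ÷ 1000 × hours, and cost = kWh × price per kWh. All inputs below (400 W under load, 4 hours a day, €0.30 per kWh) are hypothetical; plug in your own hardware's draw and your local tariff.

```python
def electricity_cost(watts: float, hours_per_day: float, price_per_kwh: float, days: int = 30) -> float:
    """Estimate the monthly electricity cost of a machine at a given average power draw."""
    kwh = watts / 1000 * hours_per_day * days  # energy in kilowatt-hours
    return kwh * price_per_kwh

# Hypothetical: desktop drawing 400 W under load, 4 h/day, at €0.30 per kWh.
print(f"€{electricity_cost(400, 4, 0.30):.2f} per month")  # €14.40 per month
```

Running the same machine around the clock would multiply that by six, which is where heavy use starts to matter.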

Should we all switch from ChatGPT to gpt-oss?

By “we,” I mean the general public. Not developers or tech-savvy people, but regular users.

And the answer is: not yet.

ChatGPT is still easier. You don’t have to maintain anything. No setup headaches. It just works.

Also, these new models haven’t been battle-tested by millions yet. There might be weird bugs, edge cases, or performance issues that still need to be worked out.

But for freelancers and organizations, my answer is yes.

If you are a freelancer heavily using AI for your client work, it does make sense to have a model hosted locally. You’re not sending sensitive stuff over APIs to a third party. And depending on your setup, it might save you money.

For companies, it’s almost a no-brainer. You can run models internally, control access, improve privacy, and likely cut costs long-term.

Can it be used for malicious purposes?

That’s always the concern with AI.

OpenAI claims they filtered out harmful content (chemical, biological, radiological, nuclear data, etc.) during pre-training, and tested how bad actors might try to fine-tune it for malicious purposes.

So, in theory, since that data was excluded during pre-training, there shouldn’t be an easy way to remove the “filters” and use the model for unethical purposes. But I guess we’ll find out soon, once the broader community starts poking at it.

So far, the general feedback is that the models are heavily censored. Some even say they are more censored than the ChatGPT models.

How to try it?

There’s a playground at:

If you want to try it yourself:
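One possible local route (an assumption, not necessarily the setup the author had in mind) is Ollama, which can pull gpt-oss-20B and serve it through an OpenAI-compatible HTTP API. The sketch below assumes Ollama is installed, the model was pulled with `ollama pull gpt-oss:20b`, and the server is listening on its default port 11434. It uses only the Python standard library.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port and has the
# "gpt-oss:20b" model pulled. Adjust URL/MODEL for other OpenAI-compatible servers.
URL = "http://localhost:11434/v1/chat/completions"
MODEL = "gpt-oss:20b"

def build_payload(prompt: str) -> dict:
    """Build a chat-completions request body in the OpenAI-compatible format."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }

def ask(prompt: str) -> str:
    """Send one prompt to the local server and return the model's reply text."""
    data = json.dumps(build_payload(prompt)).encode("utf-8")
    req = urllib.request.Request(URL, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Explain what an open-weight model is in one sentence."))` should print the model's answer. Nothing leaves your machine, which is the privacy argument from earlier in practice.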