Scam Raven: One Month In (Update 2)

78 reports, first clicks from Google, and where the AI struggles.

Hey everyone,

It’s been less than a month since I bought the domain for Scam Raven. In my last post, I shared the idea and the first steps I had already taken.

Since then, I’ve made some progress, so here’s an overview of the current situation.

Scam Reports

I’ve been fine-tuning the code that collects data for the AI and refining the prompt itself. I’ve found a formula that produces results that are “good enough” for now.

The reports aren’t perfect, and there’s still room for improvement, but with this setup I’ve managed to generate 78 reports.

On average, reviewing one report takes me about 6–8 minutes.

Here’s how they break down:

Legit - 75.6%

Suspicious - 16.7%

Scam - 7.7%
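For reference, those percentages correspond to 59 Legit, 13 Suspicious, and 6 Scam reports out of 78. Here is a minimal Python sketch of the tally, assuming the verdict counts are kept in a plain dictionary. The `breakdown` helper is hypothetical and not part of the actual Scam Raven code:

```python
# Verdict counts implied by the percentages above (59 + 13 + 6 = 78 reports).
verdict_counts = {"Legit": 59, "Suspicious": 13, "Scam": 6}


def breakdown(counts: dict[str, int]) -> dict[str, float]:
    """Return each verdict's share of all reports as a percentage, rounded to one decimal."""
    total = sum(counts.values())
    return {verdict: round(100 * n / total, 1) for verdict, n in counts.items()}


if __name__ == "__main__":
    for verdict, pct in breakdown(verdict_counts).items():
        print(f"{verdict} - {pct}%")
```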

Ideally, I’d like to see more reports fall into the Suspicious category. The problem with Scam sites is that many are short-lived, and once they die, nobody looks them up. Legit sites, on the other hand, are hit or miss: some raise credibility questions, while others are clearly solid.

Suspicious sites feel like the sweet spot. They’re existing businesses with certain flaws or red flags that raise questions for new users. But that’s just my hypothesis. Organic traffic to specific reports over the next few months will show what really works.

First Results

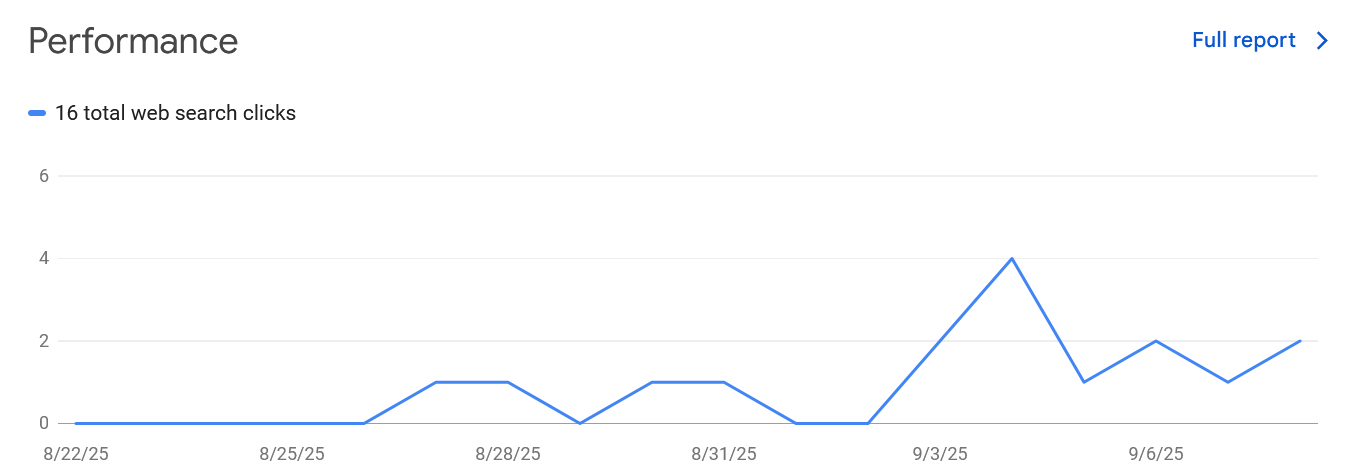

This is a brand-new website, so I don’t expect much, but I’m already getting some organic search clicks each day.

I’ve definitely seen better results, but even a few clicks give me a small boost of motivation.

“The Engine”

I briefly mentioned this in my initial post, but here’s how the reporting system works right now:

I run technical checks written in Python.

I summarize the generated data.

I build a prompt for the AI from that data.

I use GPT-5 to generate reports in a consistent format.
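The four steps above can be sketched roughly as follows. This is a hypothetical illustration, not the actual Scam Raven code: the two checks stand in for the nine real ones, the prompt wording is invented, and the model call is a stub where a real API client (such as one calling GPT-5) would go.

```python
"""Hypothetical sketch of the pipeline: checks -> summary -> prompt -> model."""
from dataclasses import dataclass
from typing import Callable
from urllib.parse import urlparse


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


# Step 1: technical checks. Two toy examples stand in for the nine real checks.
def check_https(url: str) -> CheckResult:
    scheme = urlparse(url).scheme
    return CheckResult("https", scheme == "https", f"scheme is {scheme!r}")


def check_domain_length(url: str) -> CheckResult:
    host = urlparse(url).hostname or ""
    return CheckResult("domain_length", len(host) <= 30, f"hostname has {len(host)} characters")


CHECKS: list[Callable[[str], CheckResult]] = [check_https, check_domain_length]


# Step 2: summarize raw results into compact lines the model can read.
def summarize(results: list[CheckResult]) -> str:
    return "\n".join(
        f"- {r.name}: {'PASS' if r.passed else 'FAIL'} ({r.detail})" for r in results
    )


# Step 3: build the prompt from the summary (wording is an assumption).
def build_prompt(url: str, summary: str) -> str:
    return (
        f"You are reviewing the website {url}.\n"
        f"Technical check results:\n{summary}\n"
        "Classify the site as Legit, Suspicious, or Scam and explain why "
        "using the fixed report format."
    )


# Step 4: send the prompt to a model. Stubbed: pass in any callable that returns text.
def generate_report(prompt: str, model: Callable[[str], str]) -> str:
    return model(prompt)


def run_pipeline(url: str, model: Callable[[str], str]) -> str:
    results = [check(url) for check in CHECKS]
    prompt = build_prompt(url, summarize(results))
    return generate_report(prompt, model)


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        return f"[report based on {len(prompt)} prompt characters]"

    print(run_pipeline("https://example.com", fake_model))
```

Keeping each step as a separate function makes it easy to add checks or tweak the prompt without touching the model call.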

Building this didn’t take too much effort since I knew exactly what I wanted. Vibe coding helped save time too.

The AI’s output is good enough that I don’t see a reason to build custom logic. I collect data through nine technical checks, feed it to the AI, and get results that are decent, but not polished enough to publish without editing.

Sometimes the reports contain AI artifacts, such as:

“- No clear Terms, Privacy Policy, or company registration details were included in the provided content samples.”

This shouldn’t appear in the public report.
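A simple pattern check could flag lines like this before review, so they don't slip through. A minimal sketch using Python's `re` module; the phrase list is a hypothetical starting point and would need tuning against real reports:

```python
import re

# Hypothetical phrases that show the model describing its own input instead of the site.
ARTIFACT_PATTERNS = [
    r"\bprovided (content|data|samples?)\b",
    r"\bcontent samples?\b",
    r"\bbased on the (data|information) (provided|given)\b",
    r"\bas an AI\b",
]
ARTIFACT_RE = re.compile("|".join(ARTIFACT_PATTERNS), re.IGNORECASE)


def find_artifacts(report: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs that match a known artifact pattern."""
    return [
        (lineno, line.strip())
        for lineno, line in enumerate(report.splitlines(), start=1)
        if ARTIFACT_RE.search(line)
    ]
```

Flagging rather than auto-deleting seems safer here: a regex can't tell whether the rest of the sentence carries a real finding that just needs rewording.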

Challenges

Outdated Links

One big challenge is links. Even with GPT-5, the reports often include broken or outdated links, especially to sources like G2, Capterra, Vogue Business, Reuters, or app stores.

My guess is that the AI works from old data and doesn’t fetch live URLs in real time. The facts are usually correct for well-established sites, but the links often break because of minor changes in permalink structure. Checking links manually is by far the most boring part of the process.
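Part of that chore could be automated by requesting every link in a report and surfacing only the ones that fail. A minimal sketch using only Python's standard library; the function names are hypothetical, and it tries a `HEAD` request first, falling back to `GET` when a server rejects `HEAD` with 405:

```python
import re
import urllib.error
import urllib.request
from typing import Optional

URL_RE = re.compile(r"https?://[^\s)\]>\"']+")
HEADERS = {"User-Agent": "Mozilla/5.0"}


def extract_urls(text: str) -> list[str]:
    """Pull http(s) URLs out of report text, trimming trailing punctuation."""
    return [u.rstrip(".,;:") for u in URL_RE.findall(text)]


def _status(request: urllib.request.Request, timeout: float) -> Optional[int]:
    """Return the HTTP status for a request, or None if it failed before a response."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # must come before OSError (it is a subclass)
        return err.code
    except (OSError, ValueError):  # DNS failures, timeouts, refused connections, bad URLs
        return None


def check_url(url: str, timeout: float = 10.0) -> Optional[int]:
    status = _status(urllib.request.Request(url, method="HEAD", headers=HEADERS), timeout)
    if status == 405:  # some servers reject HEAD; retry once with GET
        status = _status(urllib.request.Request(url, headers=HEADERS), timeout)
    return status


def broken_links(text: str) -> list[tuple[str, Optional[int]]]:
    """List links with no response or a 4xx/5xx status, so only those need a manual look."""
    results = [(url, check_url(url)) for url in extract_urls(text)]
    return [(url, s) for url, s in results if s is None or s >= 400]
```

One caveat: some sites may block automated requests (for example with a 403), so a flagged link is a candidate for manual review rather than proof that it is dead.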

Gray Areas

Rating websites isn’t always straightforward. Sometimes the AI and I disagree, and in those cases, I override it.

For example, I scanned a cashback platform that works with AliExpress, Ozon, Sberbank, Gazprombank, and others. Technically, it looked “legit enough,” and the AI labeled it that way. But it’s a Russian business, and there’s no way I’ll mark it as legit given the current situation.

The AI struggles to judge websites that fall into the Suspicious category. It does well with legitimate sites and obvious scams, but when a site is somewhere in between, things get tricky.

For example, the AI marked a well-established service website as Suspicious because its TLS certificate was issued for another domain (.be instead of .com). It was also a Greek website, so the AI flagged “odd characters/encoding.” But the collected indicators only showed that the website wasn’t polished or carefully maintained, not that it was a scam.

Cases like this need careful review. Otherwise, I risk labeling real businesses as Suspicious, which could lead to a cease-and-desist letter.

I’ve even seen Reddit posts where business owners were furious and talked about taking legal action against automated scanners that rated their sites poorly for something as simple as a new domain.
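One way to keep a case like the .be/.com certificate from tipping a verdict on its own is to have the check report a mismatch as a warning rather than a failure. A minimal sketch using Python's standard `ssl` and `socket` modules; the function names and warning wording are hypothetical. It verifies the certificate chain but compares hostnames itself, since the old `ssl.match_hostname` helper was removed in Python 3.12:

```python
import socket
import ssl


def fetch_cert(hostname: str, port: int = 443, timeout: float = 10.0) -> dict:
    """Fetch the peer certificate, verifying the chain but not the hostname."""
    context = ssl.create_default_context()
    context.check_hostname = False  # chain is still verified (CERT_REQUIRED)
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()


def cert_hostnames(cert: dict) -> list[str]:
    """DNS names from a certificate dict as returned by getpeercert()."""
    return [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]


def hostname_matches(hostname: str, pattern: str) -> bool:
    """Case-insensitive match supporting a single left-most wildcard (*.example.com)."""
    hostname, pattern = hostname.lower().rstrip("."), pattern.lower().rstrip(".")
    if pattern.startswith("*."):
        labels = hostname.split(".")
        return len(labels) > 2 and ".".join(labels[1:]) == pattern[2:]
    return hostname == pattern


def tls_name_check(hostname: str, cert: dict) -> str:
    names = cert_hostnames(cert)
    if any(hostname_matches(hostname, name) for name in names):
        return "ok"
    # A mismatch points to sloppy maintenance, not necessarily fraud: flag it for review.
    return f"warning: certificate covers {', '.join(names) or 'no DNS names'}, not {hostname}"
```

Usage would look like `tls_name_check(host, fetch_cert(host))`. Passing the result to the AI as a "warning" line, instead of a failed check, may make it less likely to treat the mismatch as decisive.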

And Now I Wait

The first steps are done, and now it’s time to wait before making big changes. A few reasons why:

New domain: Scam Raven is less than a month old, and it takes time to build trust with Google. Established domains with backlinks can rank new pages quickly, but new domains usually can’t.

Search Console data: I need to see what people are actually searching for, which reports attract clicks, and which don’t. Right now I’m shooting in the dark.

Sustainability: This is a fun project without deadlines. Last Sunday, I had an intense session generating and reviewing new reports, and by Monday evening I felt a bit burnt out. To keep this sustainable, it’s better not to overdo it, at least until I start seeing a decent return on my time.

What I’ll Do in the Meantime

While I wait for Google Search Console data, here’s what I’ll keep working on:

Reach the symbolic milestone of 100 reports (22 to go).

Improve tools - automate screenshots instead of taking them manually.

Get backlinks - no buying, just low-hanging fruit.

Add informational posts - to balance AI-generated reports with original content. I believe this balance matters a lot for credibility in the eyes of search engines.

I also want to give my Substack a bit more love. I’ve got a few ideas for upcoming posts, including a deep dive into the scraping business, with insights from my own projects and tools that I think are worth sharing.